Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

IRONdb is a distributed time-series database focusing on simplistic operation, resiliency, continued operations in the event of component failure, and embedding analytics and computation.

Although numeric shards can be configured with , this only removes entire shards once they are past the window. In cases where one has all data for a significant number of metrics, the storage space they occupy in rollups may be recovered by performing a compaction of one or more NNTBS shards using a map of active IDs from the .

Compaction is performed by running the shard_compactor tool. It has two required arguments:

-d <nntbs_dir> - The path where NNTBS shards are stored. This is typically

found under /irondb/nntbs, or /snowth/nntbs on deployments hosted by

Circonus. The directory name matches the node's cluster UUID.

current : The current topology in which this node resides.

next : The next topology for this node.

state : Current rebalance state for this node. Value is one of:

TOPO_REBALANCE_IDLE (no rebalance activity)

TOPO_REBALANCE_VOTE (establishing agreement on next hash across the cluster)

TOPO_REBALANCE_REHASH (relocating data)

TOPO_REBALANCE_REHASH_VOTE (waiting for data relocation completion on all nodes)

TOPO_REBALANCE_CLEANUP (removing data from old topology)

TOPO_REBALANCE_COMPLETE (local operations complete, switching to next topology)

TOPO_REBALANCE_COMPLETE_VOTE (waiting for all other nodes to complete and switch to the next topology)

During a rebalance operation, each node will proceed through the above states in order, returning to TOPO_REBALANCE_IDLE when finished. The topology that was listed as "next" is now "current", and "next" is now "-", meaning no next topology.

-s <shard> - The name of a shard to compact. Shards are named for the rollup period and the start and end timestamps that they represent. This option may be specified multiple times to compact more than one shard. Shards will be compacted serially.

Run /opt/circonus/bin/shard_compactor --help for full usage information. The tool must be run as the unprivileged user that IRONdb runs as, typically nobody.

This is an online operation (the IRONdb service must be running). Each shard will be put into an "offline" mode while it is being compacted. Requests for data within the shard will be redirected to other cluster nodes during the operation.

Compaction should only be performed on shards that are no longer getting new data. In other words, shards that are older than the raw database's min_delete_age plus delete_after_quiescent_age.

A surrogate ID map is only valid for the host from which it was obtained, and should never be used for compacting shards on a different host.

Care should be taken to avoid compacting the same shard at the same time on multiple cluster nodes. Doing so may jeopardize the availability of metric data if too many of one shard are offline at once. Since compaction is a background maintenance task, it is preferable to run it on one node at a time.

Given an IRONdb node whose cluster ID is 84d2979a-f233-47d3-9a15-d4f8885c9b7c:

$ sudo -u nobody /opt/circonus/bin/shard_compactor \

-d /irondb/nntbs/84d2979a-f233-47d3-9a15-d4f8885c9b7c \

-s 60_1551432000-1552041600 \

-s 60_1552041600-1552651200Starting with release 0.12, IRONdb supports tracking of metric activity without the expense of reading all known time series data to find active ranges. The activity of a metric is tracked at a 5 minute granularity. Any ingestion of a metric will mark that 5 minute period that the timestamp falls into as active for that metric. Activity periods are stored in the surrogate database.

This activity tracking also coalesces nearby active ranges. Any activity on a metric within an 8 hour window marks that metric as active for that 8 hour span. For example, if you have a metric that arrived with the timestamp: 2018-07-03T11:00:01:123Z and then nothing else arrived until 2018-07-03T19:00:02:123Z, the metric would be considered inactive in the 8 hour span between these 2 timestamps. If, later, some late data arrives and we see a timestamp at: 2018-07-03T14:00:01:123Z, then the entire 8 hour span is considered active for purposes of querying.

See Searching Tags on how to query activity periods for a given list of metrics.

This activity tracking only applies to data ingested after the upgrade to 0.12 or later. Any data ingested prior to installation of 0.12 will be invisible to the activity tracking code. However, IRONdb also ships with an API to rebuild activity tracking data by reading the actual datapoints for a metric to determine its activity ranges. Since this is an expensive operation it has to be triggered for a list of metrics by an operator.

Do not trigger this API until you have upgraded all IRONdb nodes to

0.12or later.

/surrogate/activity_rebuild

POST

A JSON document which lists the set of metrics to rebuild activity data for, with the syntax:

The above will rebuild activity for the 3 metrics listed in the document.

IRONdb supports remote write and read capabilities to provide long-term metric storage for Prometheus deployments. One IRONdb cluster can support many individual Prometheus instances.

Both read and write requests to IRONdb can safely go to any node in an IRONdb cluster. To ensure high availability and distribute load, users are encouraged to put a load balancer between the Prometheus nodes and the cluster.

IRONdb has native endpoints for accepting remote write data from a Prometheus installation. Once the Prometheus module is enabled, data can be sent to IRONdb by setting the Prometheus remote_write endpoint to:

http://irondbnode:8112/module/prometheus/write/<accountid>/<uuid>

Prometheus data is not namespaced by nature. This can create confusion if different copies of Prometheus have identically named metrics. Inside of IRONdb, we require that all data be namespaced under a UUID. This UUID can be created using uuidgen on a typical UNIX(like) system or via any external tool or website that generates UUIDs. Each distinct set of Prometheus data should have its own UUID. For high-availability in Prometheus it is the recommended pratice to have two copies collecting the same data. While these two instances do not contain the same data, they do represent the same metrics, and so should share a common UUID for their namespace. One may wish to send both of these instances into IRONdb where they simply become more samples in the given metric stream.

All metrics live under a numeric identifier (one can think of this like an account ID). Metric names can only be associated with one "account ID". This allows separate client instances that completely segregate data.

To configure a Prometheus instance to write to IRONdb the Prometheus YAML configuration file will need to be updated. The remote_write section's url field should be set to http://irondbnode:8112/module/prometheus/write/<accountid>/<uuid>.

This should look something like:

To configure a Prometheus instance to use IRONdb as a remote datasource, the Prometheus YAML configuration file will need to be updated. The remote_read section's url field should be set to http://irondbnode:8112/module/prometheus/read/<accountid>/<uuid>.

This should look something like:

But with an account ID and UUID value matching what was configured in the remote write URL.

In contrast to or , operational needs may call for migrating a cluster to a new set of machines entirely. This may be due to hardware lifecycle requirements and/or the desire to modify the topology all at once.

As with individual node reconstitution, this is a "pull"-type operation, where the new cluster's nodes pull the necessary metric data from the source cluster. The following procedure will be run on each of the new cluster's nodes. Multiple new-cluster nodes can reconstitute simultaneously if the source cluster has sufficient read capacity, but exercise care, since every reconstituting node will read from every source cluster node.

Reconstitution requires that at least one replica of every metric stream stored on the existing cluster be available. A reconstitute operation cannot complete if more than W-1 nodes of the existing cluster are unavailable (

Wwrite_copiesFor example, given a cluster of 10 nodes (N=10) with 3 write copies (W=3), a new cluster may be reconstituted if at least 10-(3-1), or 8, of its nodes are available and healthy.

As this can be a long-running procedure, a terminal multiplexer such as tmux or screen is recommended to avoid interruption.

On each of the new cluster nodes, after installing and configuring the new topology, perform the following steps to reconstitute each of the new nodes from the source cluster.

Make sure there is no lock file located at /irondb/logs/snowth.lock. If there is, remove it with the following command:

Note the topology hash from the source cluster. This is the value of the active attribute in /opt/circonus/etc/topology.conf on one of the source cluster's nodes. The hash will be referred to below as <source_cluster_topo_hash>.

Run IRONdb in reconstitute mode using the following command:

where the argument to -O is the IP address and port of a node in the source cluster. The port is the cluster API port, typically 8112. The reconstitute will get the topology information from the source cluster node using the specified topology. Actual metric data fetches will be done against all source cluster nodes, using the topology information to determine the primary owner of each metric stream.

Wait until the reconstitute operation has fetched 100% of its data from the source cluster. You can access the current percentage done at:

In reconstitute mode, the normal UI is replaced with a special one giving reconstitute status. Note that there may not be messages appearing on the console while this runs. This is normal; do not stop the reconstitute. Current progress will be saved - if the process stops for any reason, everything should pick back up approximately where it was. If the download stops partway for any reason, you may resume it with the following command:

Once the reconstituting node has retrieved all of its data, you will see the following on the console:

[

{

"check_uuid":"1fd7c873-0055-4bd3-a16a-2137b111e71a",

"metric_name":"foo"

},

{

"check_uuid":"1fd7c873-0055-4bd3-a16a-2137b111e71a",

"metric_name":"bar"

},

{

"check_uuid":"1fd7c873-0055-4bd3-a16a-2137b111e71a",

"metric_name":"baz|ST[a:b,c:d]"

}

]remote_write:

- url: "https://irondbnode:8112/module/prometheus/write/1/321b704b-a8ff-44b7-8171-777dc49bc788"remote_read:

- url: "https://irondbnode:8112/module/prometheus/read/1/321b704b-a8ff-44b7-8171-777dc49bc788"rm -f /irondb/logs/snowth.lock/opt/circonus/bin/irondb-start -B -E \

-T <source_cluster_topo_hash> \

-O <source_cluster_node_ip>:<port>http://<node ip address>:<node port>//opt/circonus/bin/irondb-start -B \

-T <source_cluster_topo_hash> \

-O <source_cluster_node_ip>:<port>Reconstitute Finished!This is intended as a general guide to determining how many nodes and how much storage space per node you require for your workload. Please contact Apica if you have questions arising from your specific needs.

T is the number of unique metric streams.

N is the number of nodes participating in the cluster.

W is the number of times a given measurement is stored across the cluster.

For example, if you have 1 GB of metric data, you must have W GB of storage space across the cluster.

The value of W determines the number of nodes that can be unavailable before metric data become inaccessible. A cluster with W write copies can survive W-1 node failures before a partial data outage will occur.

Metric streams are distributed approximately evenly across the nodes in the cluster. In other words, each node is responsible for storing approximately (T*W)/N metric streams. For example, a cluster of 4 nodes with 100K streams and W=2 would store about 50K streams per node.

Nodes should be operated at no more than 70% capacity.

Favor ZFS striped mirrors over other pool layouts. This provides the highest performance in IOPS.

W must be >= 2

N must be >=

The system stores three types of data: text, numeric (statistical aggregates), and histograms. Additionally there are two tiers of data storage: near-term and long-term. Near-term storage is called the and stores at full resolution (however frequently measurements were collected.) Long-term resolution is determined by the .

The default configuration for the raw database is to collect data into shards (time buckets) of 1 week, and to retain those shards for 4 weeks before rolling them up into long-term storage. At 1-minute collection frequency, a single numeric stream would require approximately 118 KiB per 1-week shard, or 472 KiB total, before being rolled up to long-term storage.

These numbers represent uncompressed data. With our default LZ4 compression setting in ZFS, we see 3.5x-4x compression ratios for numeric data.

The following modeling is based on an observed distribution of all data types, in long-term storage, across many clients and may be adjusted from time to time. This would be in addition to the raw database storage above.

All sizing above represents uncompressed data.

Suppose we want to store 100,000 metric streams at 1-minute resolution for 5 years. We'd like to build a 4-node cluster with a W value of 2.

Apica recommends server-class hardware for all production deployments. This includes, but is not limited to, features like ECC memory and hot-swappable hard drives.

See for general advice.

Specifically, hardware RAID should be . ZFS should be given access to raw hard drive devices whenever possible.

In addition to the overall storage space requirements above, consideration must be given to the IOPS requirements. The minimum IOPS required is the primary write load of ingesting metric data (approximately 12 bytes per measurement point), but there is additional internal work such as parsing and various database accounting operations that can induce disk reads beyond the pure writing of measurement data. After initial ingestion there are other operations, such as searching, rollups, and maintenance activity like reconstitution and ZFS scrubbing that require additional IOPS. Ensure that the hardware you choose for your nodes has the capacity to allow for these operations without significantly impacting ongoing ingestion.

ZFS's helps by absorbing some portion of the read load, so the more RAM available to the system, the better.

The following are sample profiles to guide you in selecting the right combination of hardware and cluster topology for your needs.

Assumptions:

10-second collection frequency

4 weeks of near-term (full-resolution) storage

2 years of historical data at 1-minute resolution

striped-mirror ZFS pool layout

If an IRONdb node or its data is damaged or lost, its data may be rebuilt from replicas elsewhere in the cluster. This process is known as "reconstituting" a node.

Reconstitution requires that at least one replica of every metric stream stored on the reconstituting node be available. A reconstitute operation cannot complete if more than W-1 nodes are unavailable, including the node being reconstituted (W is the number of write_copies configured for the current topology.)

For example, given a cluster of 10 nodes (N=10) with 3 write copies (W=3), a node may be reconstituted if at least N-(W-1), or 8, other nodes are available and healthy.

As this can be a long-running procedure, a terminal multiplexer such as tmux or screen is recommended to avoid interruption.

Log into the IRONdb node you wish to reconstitute as root or a privileged user. Make sure the IRONdb package is .

Note: If the entire old node was replaced (e.g., due to a hardware failure), or the ZFS pool has been recreated (due to hardware failure or administrative action), then you should repeat and then . The installer will not interfere with an existing irondb.conf file but will ensure that all necessary ZFS datasets and node-id subdirectories have been created.

Make note of this node's topology UUID, found in the

This API call is for deleting all of the data from an IRONdb node for a specific metric or for a set of metrics (when a tag query is specified). It will remove data for the matching metric(s) throughout all timestamps and all rollups that have been provided by the user, no matter what the data type. In addition, it will remove all record of the metric name(s) with their tags and metadata. This call is intended for removing misnamed/experimental metrics or old metrics which are obsolete and can be safely removed.

When used for deletion of a single metric, this call will return a JSON object that reports if the request succeeded or not.

When used with wildcards or a tag query, this call always returns a JSON object which describes the matching metrics and the actions taken or errors received on the deletion. A list of the possible result statuses for each metric and what they mean can be found here. For safety, explicit confirmation is required in the headers to actually force the data deletion.

It is highly recommended to perform the deletion API call without confirmation as a first step, in order to review what would actually be deleted (and hopefully avoid accidentally deleting more data than intended).

Deletion is currently only supported on a single node per API call. To delete data from the entire cluster, issue the same API call to each node.

API description: See "Data Deletion" in the

In this example:

full : This tells the system that full data and metadata will be removed for the specified metric.

canonical : This tells the system to delete a single metric that matches the given UUID and metric name.

1234 : Delete data only for the given account id

Sample Output for Single Metric Example

In this example:

full : This tells the system that all data and metadata for the matching metrics will be removed.

tags : This tells the system that this is a tag query.

1234 : Delete data only for the given account id

Sample Output for Query Example

When doing a delete which could affect multiple metrics, the returned JSON response will indicate the final status for each metric which matched the request. A list of these statuses and a description is given below. Note that, in many cases, the "payload' field will contain further details.

Bad request : The URI did not conform to expected syntax or inputs for the API

Deleted : Data was found and the deletion completed successfully

Found : Data was found that can be deleted if request is submitted again with delete confirmation

2025-09-02

Add support for Prometheus data - both an API endpoint and from Kafka using the libmtev Kafka module.

Added ability to configure irondb-relay to drain journals on shutdown instead of just exiting. The default is still to just exit.

2025-04-01

Update error logging to be more accurate and provide more detail.

2025-03-07

Update Docker base image to be Ubuntu 22.04.

Improve graphite read error messages.

2024-03-27

Update libmtev dependency, which fixes potential memory corruption issues.

2024-01-31

Add TLS support

2024-01-25

Fix Docker build to bust apt caches and avoid errors.

Update setup script to better support HTTPS URLs in the bootstrap list.

Add C++ guards to headers and convert send code to C++ to take advantage of libsnowth features.

2023-11-06

Add Docker support.

2023-09-05

Use new libsnowth_init function to avoid potential buffer overflow.

2023-06-06

Remove unused DH parameter files from configuration.

2023-03-06

Fix simdjson linking.

2022-09-14

Fix log rotation.

2022-06-09

Initialize metric_t structures to avoid data corruption.

2022-02-07

Replace deprecated mtev_atomic* types and functions with compatibles ones from ConcurrencyKit (libck).

2022-02-04

Fix an issue where some jlog subscribers were not advanced when they did not have work to do. This led to increased disk usage from processed segments that could not be removed.

2021-04-09

Bring setup and start scripts into the repo.

2021-03-24

Improved error handling/data parsing.

Accept UTF-8 Graphite data.

Move debug/parsing log to debug/parsing/graphite and add error/parsing/graphite log to catch parsing errors.

Each IRONdb node exposes a wealth of information about its internal operation. There are two ways to obtain this data: pulling JSON from a REST stats endpoint, or having IRONdb push its own stats into a particular account/check using a loadable module. In both cases, the metrics exposed are the same.

The types of statistics available are described in the section of the Operations page.

The JSON endpoint is best for viewing live information. The internal monitor module is best suited to long-term trending in standalone IRONdb deployments. Its metrics may be retrieved using one of the type-specific .

Both methods are described below.

JSON-formatted metrics are available from two REST endpoints, each having two format options:

In the following guide we will demonstrate a typical IRONdb installation on Linux, using ZFS.

The raw API accepts direct input of measurement data at arbitrary frequencies. It stores every measurement as it was received, for a configurable amount of time, before aging it out to a rollup format.

Metric records are in one of several formats, and are accepted as either tab-separated values or as FlatBuffer messages.

API description: See "Data Submission" in the

The essential steps to changing the topology of an existing IRONdb cluster are as follows:

Create your new topology.

Load the new topology to all nodes that will be part of the new cluster.

Start the "rebalance" operation on each node, which begins the migration of metric data to the new topology. Depending on the amount of stored data, this process may take a long time.

Rebalancing involves recalculating the node ownership for each individual metric stream, and then sending that stream to the new owning node. All metric data remain available during a rebalance, under the old topology. New, incoming metric data is replicated to

<ingestion max_allowable_days_before_current_time="<num days">/> and <ingestion max_allowable_days_after_current_time="<num_dats">/>. A value of 0 means no limit.WW should be >= 3 when N >= 6

W should be >= 4 when N >= 100

10MM

3

30MM

15

2MM

24

256

24x 4T

100MM

3

300MM

75

4MM

36

384

45x 4T

10 seconds

120,000 bytes

43,020,000 bytes

1 minute

20,000 bytes

7,170,000 bytes

5 minute

3,800 bytes

1,386,000 bytes

1MM

3

3MM

5

600K

12

128

6x 2T

6f6bdc73-2352-4bdc-ab0e-72f66d0dee12 : Check UUID

example : Metric name

1 : Confirm to actually commit to the deletion (we highly recommend omitting this header at first, to examine what will be deleted)query : See Tag Queries for more info on tag queries.

6f6bdc73-2352-4bdc-ab0e-72f66d0dee12: The UUID to match.

multiple_example*: The metric name to match, with * denoting a wildcard.

Invalid range : An argument is not within the proper range of allowable values

No content : No data to be deleted was found (prior to the end time if not full delete)

Not found : The metric name was not found

Not implemented : The supplied request is not currently implemented

Not local : The metric's data is not stored or replicated on this node of the cluster

Redirected : The request for deletion was forwarded to another node(s)

Server error : An error occurred while performing the deletion

Unable busy : The deletion request cannot be performed currently, please try later

Undefined : The result code is unknown and not valid

If you are new to ZFS, there are some basic concepts that you should become familiar with to best utilize your server hardware with ZFS.

References:

ZFS: The Last Word in Filesystems Old but still largely relevant presentation introducing ZFS, from Sun Microsystems

Pools are the basis of ZFS storage. They are constructed out of "virtual devices" (vdevs), which can be individual disks or groupings of disks that provide some form of redundancy for writes to the group.

Review the zpool man page for details.

Datasets are logical groupings of objects within a pool. They are accessed in one of two ways: as a POSIX-compliant filesystem, or as a block device. In this guide we will only be dealing with the filesystem type.

Filesystem datasets are mounted in the standard UNIX hierarchy just as traditional filesystems are. The difference is that the "device" part of the mount is a hierarchical name, starting with the pool name, rather than a device name such as /dev/sdc1. The specific mountpoint of a given filesystem is determined by its mountpoint property. See the zfs man page for more information on ZFS dataset properties.

Please note that IRONdb setup configures all necessary datatset properties. No pre-configuration is required.

On Linux, ZFS filesystems are mounted at boot by the zfs-mount service. They are not kept in the traditional /etc/fstab file.

Packages for ZFS are available from the standard Ubuntu repository.

IRONdb setup expects a zpool to exist, but will take care of creating all necessary filesystems and directories.

For best performance with IRONdb, consider using mirror groups. These provide the highest number of write IOPS, but at a cost of 50% of available raw storage. Balancing the capacity of individual nodes with the number of nodes in your IRONdb cluster is something that Apica Support can help you with.

In our example system we have 12 drives available for our IRONdb pool. We will

configure six 2-way mirror groups, across which writes will be striped. This is

similar to a RAID-10 setup. We will call our pool "data". To simplify the

example command we are using the traditional sdX names, but it's recommended

that you usedifferent identifiers

for your devices that are less susceptible to change and make it easier to

maintain.

Using the zpool status command we can see our new pool:

At this point you may wish to reboot the system to ensure that the pool is present at startup.

This step is only required if using the standalone IRONdb product. If you are referring to this appendix as an on-premise Apica Inside user, there is no further manual setup required at this point. All IRONdb setup from this point is handled by the Apica Inside installer.

Now that you have created a ZFS pool you may begin the IRONdb installation. If you have multiple pools configured and you want to use a specific pool for IRONdb, you can use the -z option to the setup script.

The setup script takes care of creating the /irondb mountpoint and all other necessary filesystems, as well as setting the required properties on those filesystems. No other administrative action at the ZFS level should be required at this point.

T=100,000

N=4

W=2

T * 7,170,000 (bytes/year/stream) * 5 years = 3,585,000,000,000 bytes

3,585,000,000,000 bytes / (1024^3) = 3338 GiB

T * 483,840 (bytes/4 weeks raw/stream) / (1024^3) = 45 GiB

( (3338+45) * W) / N = 1692 GiB per node

1692 GiB / 70% utilization = 2417 GiB of usable space per node

2417 GiB * 2 = 4834 GiB of raw attached storage in ZFS mirrors per nodecurl -X DELETE \

-H 'x-snowth-account-id: 1234' \

http://127.0.0.1:8112/full/canonical/6f6bdc73-2352-4bdc-ab0e-72f66d0dee12/example{ "status": "succeeded" }curl -X DELETE \

-H 'x-snowth-account-id: 1234' \

-H 'x-snowth-confirm-delete: 1' \

http://127.0.0.1:8112/full/tags?query=and(__check_uuid:6f6bdc73-2352-4bdc-ab0e-72f66d0dee12,__name:multiple_example*)[ {"metric_name":"multiple_example_cpuutil_server1","delete_result":"not local","payload":""},

{"metric_name":"multiple_example_cpuutil_server2","delete_result":"ok","payload":""},

...

]sudo apt-get update

sudo apt-get install zfsutils-linuxzpool create data \

mirror sdc sdd \

mirror sde sdf \

mirror sdg sdh \

mirror sdi sdj \

mirror sdk sdl \

mirror sdm sdn pool: data

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

data ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

sdc ONLINE 0 0 0

sdd ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

sde ONLINE 0 0 0

sdf ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

sdg ONLINE 0 0 0

sdh ONLINE 0 0 0

mirror-3 ONLINE 0 0 0

sdi ONLINE 0 0 0

sdj ONLINE 0 0 0

mirror-4 ONLINE 0 0 0

sdk ONLINE 0 0 0

sdl ONLINE 0 0 0

mirror-5 ONLINE 0 0 0

sdm ONLINE 0 0 0

sdn ONLINE 0 0 0

errors: No known data errors/opt/circonus/bin/setup-irondb (other options) -z data<node_id>.If the IRONdb service is running, stop it.

Make sure there is no lock file located at /irondb/logs/snowth.lock. If there is, remove it with the following command:

If you repeated initial installation on this node, you may skip to the next step. Otherwise, follow this procedure to clean out any incomplete or damaged data.

Run the following command to find the base ZFS dataset. This will create a shell variable, BASE_DATASET, that will be used in subsequent commands.

Destroy the existing data using the following commands:

Wait for the data to be completely destroyed. To do this, periodically run the following command and wait until the value for all pools reads "0".

Recreate the dataset structure by running the following commands:

Run the following commands to make the node-id subdirectories:

Make sure that all the directories are owned by the nobody user by running the following:

Run IRONdb in reconstitute mode using the following command:

Wait until the reconstitute operation has fetched 100% of its data from cluster peers. You can access the current percentage done as an auto-refreshing UI via:

or as raw JSON at:

...and looking at the "reconstitute" stats.

Note: There may not be messages appearing on the console while this runs. This is normal. Do not stop the reconstitute. Completion percentages may pause for long periods of time during reconstitution.

Current progress will be saved - if the process stops for any reason, everything should resume approximately where it was. A reconstitute may be resumed with the same command:

Once the reconstituting node has retrieved all of its data, you will see the following on the console:

http://<nodename or ip>:8112/stats.json or /stats.json?format=tagged

http://<nodename or ip>:8112/mtev/stats.json or /mtev/stats.json?format=tagged

The first endpoint provides application-level statistics, such as database performance, replication latencies, topology info, etc. These are the same metrics that are visible in the UI Internals tab Stats pane under the snowth. namespace.

The second endpoint provides libmtev framework statistics, such as job queue latencies, memory management, and REST API latencies. These are the same metrics that are visible in the UI Internals tab Stats pane under the mtev. namespace.

The format options are discussed below.

Changing an existing check against the default format to tagged format, or vice versa, will result in different metric names, even though the data represented is the same.

The default format for metric names is hierarchical. The broadest category of statistics is the top level, descending to more specific sub-categories, and finally listing individual metrics.

For example, the raw database PUT latency histogram metric is represented in the default format as:

which results in a metric named:

There are no tags in the default format.

The tag format is still in development. Names of metrics and tags may change without warning.

If provided the query string format=tagged, both endpoints will produce metrics with stream tags instead of the hierarchy used in the default format. The same metric from above is represented in tagged format as:

which results in a metric named latency with tags indicating the database type (raw) and the type of operation (put) that are encoded in the metric name in the default format. There are additional tags for the node's UUID and a "units" tag indicating what the metric's value represents. In this case it is seconds.

This module is still in development. Names of metrics and tags may change without warning.

The internal monitor module exports all of the same statistics (both application and libmtev framework) as the JSON endpoints above. It records them in the tagged format (described above) under a designated account ID and check UUID. The module may be configured to store these metrics at intervals ranging from 1 second to several minutes or more.

Metrics stored by the monitor module are replicated to additional nodes (if any) in the same way as metrics ingested from outside.

The monitor module is not enabled by default. To enable it, add the following

configuration to /opt/circonus/etc/irondb-modules-site.conf, generate a new

UUID and use it to replace the null uuid in the example, and then restart the

IRONdb service:

This file will preserve local edits across package updates.

Available configuration parameters:

uuid (required): The check UUID under which the module's metrics should be stored. This cannot be the null UUID (all 0s).

account_id (optional): The account ID with which to associate the module's metrics. Default: 1

period (optional): The collection period for metrics. Specified as an integer suffixed by one of (ms|s|min|hr). Minimum value is 1 second. Default: 60s

filter (optional): The specifying which metrics are stored by the module. If no filter is specified, all metrics will be stored. Default: No filter

The check UUID is an identifier for grouping the internal metrics together. It is recommended that you choose a UUID that is different from any associated with Graphite, Prometheus, or OpenTSDB listener configurations. This will ensure that the internal metrics are not mixed in with your external time series data. Likewise, account_id may be used as another level of segregation, or you may choose to leave the metrics in the same account ID as your other metrics.

To get a list of metrics recorded by the module, perform a tag query using the synthetic __check_uuid tag:

The search results may be narrowed by including additional tags. In the following example, we are looking for the latency of raw-database PUT operations:

which produces this result:

The metric is reported to be a histogram, so using the histogram read API we can fetch some data for this metric. We need to URL-encode the metric name since it contains some characters that are not allowed in URLs.

Result:

Raw metric records may be submitted in one of several formats, depending on the type of metric data contained within.

Individual numeric or text metrics submitted to the raw endpoint as lines of ASCII characters use the following format, referred to as an M record:

Components are separated by TAB characters. Multiple records may be sent in the same operation, separated by newline ().

M : Denotes an M record.

TIMESTAMP : An epoch timestamp recording the time of the observation, with milliseconds. In terms of format, it is %lu.%03lu, i.e., 1516820826.120. While this might look like a float, it is, in fact, a strict textual format that requires exactly three digits after the decimal point. These must always be included, even if they are 000.

UUID : An identifier of the account and check to which this metric belongs. Despite its name, this identifier must be in the form:

TARGET is conventionally the IP address of the check target, but may be any meaningful string identifying the subject of the check.

MODULE is conventionally the name of the .

NAME : The of this metric.

TYPE : The type of data that the VALUE represents:

i: int32

I: uint32

VALUE : The value observed. VALUE is always a string or [[null]] (never encoded/packed).

Numeric measurements which collide on TIMESTAMP/UUID/NAME will store the largest absolute value for that time period, by default. This behavior is configurable via the

conflict_resolversetting for the raw database.

A sample M record:

This is a metric, duration, on account 123, for the HTTP check 1b988fd7-d1e1-48ec-848e-55709511d43f with a TYPE of uint32 (I) and a VALUE of 1.

Histogram submission is similar to M records above, but instead of a single-value payload, a base64-encoded serialization of the histogram structure is used. This is referred to as an H1 record. As with M records, the components are tab-separated.

TIMESTAMP : Same as with M records above.

UUID : Same as with M records above.

NAME : Same as with M records above.

HISTOGRAM : A base64-encoded, serialized histogram. See the

hist_serialize() function in * ,

the reference implementation of histograms in Circonus.

A sample H1 record:

This is a histogram of values for the metric maximum, on an ICMP check for account 123.

A FlatBuffer metric payload is submitted as a MetricList as specified in the Reconnoiter FlatBuffer source.

When submitting FlatBuffer-encoded metrics, a client must set the HTTP headerContent-Type to application/x-circonus-metric-list-flatbuffer and set the

HTTP header X-Snowth-Datapoints to the number of data points within the raw

submission.

Download the desired release version.

Unzip into plugins directory.

Restart Grafana.

git clone https://github.com/circonus-labs/circonus-irondb-datasource.git into plugins directory.

Restart Grafana.

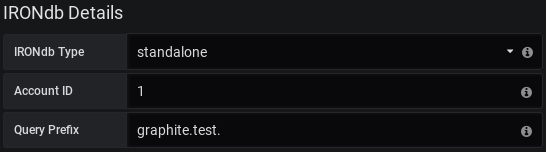

Create a new datasource and select IRONdb from the Type drop down.

Standalone: If this datasource will access a standalone IRONdb cluster, then this is the URL where IRONdb can be accessed. Example: http://nodename:8112, or if TLS is in use, https://nodename:8443.

Hosted: If this datasource will access data through Circonus, then the URL

should be set as: https://api.circonus.com

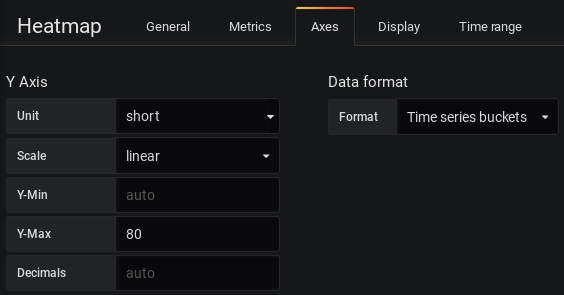

Change the IRONdb configuration options at the bottom of the datasource configuration page.

Standalone: An IRONdb cluster accessible directly, requires entry of Account ID.

Hosted: An IRONdb instance hosted by Circonus, requires entry of API token.

Depending on which of the above configurations you've chosen, you will either be presented with Account ID or API Token configuration options detailed below.

The Account ID associated with the account to pull metrics from.

The API Token associated with the account to pull metrics from. This can be found on your API Tokens page after logging in at https://login.circonus.com/ in the "Integrations" -> "API Tokens" section.

Note: Be sure to log into Circonus and change the Grafana App State to "allow" if that isn't the default for the provided API Token.

Create a new panel and set the datasource to name selected in the IRONdb datasource configuration.

For standard Circonus metric queries, use the metric browser to navigate the metric hierarchy of your IRONdb instance or type queries manually using the Toggle Edit Mode menu item to the right.

CAQL queries must be entered manually by selecting the CAQL checkbox or switching manually to the editor mode.

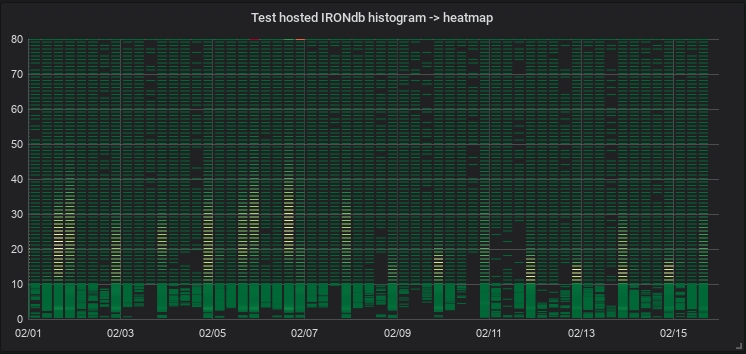

To visualize a histogram, you must search for the metric using find:histogram, for example:

For this processed data to be displayed on the heatmap panel as the sample above, select Time Series Buckets as the Data Format to be used on the Heatmap panel.

How to Configure a Template Variable for IRONdb

From a dashboard, click Settings in the top right.

On the left hand side, select the Variables section.

Click +New and choose a name for your new variable.

Select the proper data source: IRONdb.

Under Query, enter the metric you wish to use in this variable (without tags).

Enable Include All Option and enter * for Custom all value.

Click Enabled under Value groups/tags to enable tags support.

Enter the tag category you wish to use in your variable under Tag values query.

If you successfully completed the prior steps, Preview of values should now auto-complete the tag values.

Finish setup by clicking Add and then Save.

Your new template variable should now appear in the query builder!

The build process requires node, npm, yarn, typescrypt, and tslint

On Cent7 setup:

This content was sourced from the README on GitHub.

After all nodes complete the rebalance, they will switch their active topology from old to new.

A helper tool exists to simplify the procedure, and its use is illustrated below. Both additions and removals may be performed in the same operation, subject to the restrictions stated in the Caveats section below.

The helper tool utilizes the IRONdb REST API which, by default, listens on TCP port 8112. See the Rebalancing APIs reference for details. The helper tool is not necessary in order to perform a resize; the same operation may be performed using the APIs directly.

Rebalance cannot be used to transform a cluster with no sides into a sided cluster, or vice versa. Such a change requires migrating to a new cluster.

When removing nodes from a cluster, no more than W-1 (one less than the number of write copies) nodes may be removed in a rebalance operation. For example, a cluster with W=3 may have a maximum of 2 nodes removed at a time. Removing more than this number of nodes could jeopardize data availability.

If resizing a sided cluster, the new cluster topology must still have at least W/2 (half the number of write copies) nodes on each side, to ensure that the promise of metric distribution across sides can be maintained. For example, a sided cluster with W=3 must still have at least 2 nodes on each side in the new topology (fractional values are rounded up to the nearest integer.)

During a rebalance operation, the existing cluster nodes all send their portions of the relocating metrics to the new node(s) simultaneously. Depending on the topology and the amount of existing metric data, this may be too much for the incoming node(s) to handle. If this is the case, the transfers maybe done sequentially by adding the following line to irondb.conf, just before the closing </snowth> line:

This will make the overall operation take longer to complete, but should avoid overwhelming the incoming node(s).

This value will only take effect at the start of a rebalance operation, and will be ignored if changed while a rebalance is ongoing. To abandon a rebalance operation, see the last item of either Adding Nodes or Removing Nodes below.

An existing IRONdb cluster has two nodes with write factor of 2. A new node is prepared by running the installation which creates a standalone node with its own topology. We want to combine these three nodes together to create a three-node cluster, maintaining 2 write copies.

We will use the cluster resizing tool, /opt/circonus/bin/resize_cluster. Run this with the -h option for details on the available options.

Choose one of the existing cluster nodes and note its IP address and API port. This will be the "bootstrap node" from which the resize tool will fetch the existing cluster's topology. If you do not specify the API port, the default (8112) will be assumed.

Note the new node's IP address and node UUID, and, if the cluster is sided, whether the node will be added to side "a" or "b".

Run the resize tool, specifying the new node with a comma-separated tuple of IP address, node ID, and optionally a side. If adding more than one node, specify the -a option multiple times.

/opt/circonus/bin/resize_cluster -b <bootstrap_node_ip[:port]> -a <new_ip,new_uuid>

A summary of the new topology will be displayed, along with a listing of the existing cluster and the proposed changes. Unless you specified the -y (always answer "yes") option, you will be asked to confirm the changes before any actual work begins.

Once the changes are confirmed, IRONdb will start rebalancing the data. The new topology hash will be shown once it has been calculated.

After all nodes complete the rebalance, they will switch their active topology from old to new. Each node will then kick off a delete operation of any metrics that no longer belong on that node.

To view progress, retrieve the via GET of /rebalance/state:

curl http://<node>:<api-port>/rebalance/state

To abort the rebalance, and remove the rebalance state file:

/irondb/localstate/.rebalance_state.json

on every node, including on any new nodes that were added. Then start the service again.

Shrinking a cluster is basically the same as adding, above:

Create a new topology with the nodes that should remain.

Load the new topology to all nodes, including the ones that are leaving.

Start rebalance to new topology on all nodes, including the ones that are leaving.

We will use the cluster resizing tool, /opt/circonus/bin/resize_cluster. Run this with the -h option for details on the available options.

Choose a node that will be staying in the cluster and note its IP address and API port. This will be the "bootstrap node" from which the resize tool will fetch the existing cluster's topology. If you do not specify the API port, the default (8112) will be assumed.

Note the node UUID of the node(s) that will be removed.

Run the resize tool, specifying the removed nodes by their node UUID. If removing more than one node, specify the -r option multiple times.

/opt/circonus/bin/resize_cluster -b <bootstrap_node_ip[:port]> -r <removed_uuid>

A summary of the new topology will be displayed, along with a listing of the existing cluster and the proposed changes. Unless you specified the -y (always answer "yes") option, you will be asked to confirm the changes before any actual work begins.

Once you have confirmed the changes, IRONdb will start rebalancing the data. The new topology hash will be shown once it has been calculated.

To view progress, retrieve the via GET of /rebalance/state:

curl http://<node>:<api-port>/rebalance/state

To abort the rebalance, and remove the rebalance state file:

/irondb/localstate/.rebalance_state.json

on every node, including on any leaving nodes. Then start the service again.

Reference to available options and arguments.

To obtain the most current usage summary: /opt/circonus/sbin/snowthd -h

-k <start|stop|status>

status will exit 0 if the process is running, non-zero otherwise.

These options are mutually exclusive of one another. One or the other is required.

-i <uuid>

Identify this node with <uuid>. This is the normal mode of operation.

-e

Boot the node in ephemeral mode. Ephemeral nodes are read-only participants in the cluster. They do not appear in the cluster topology, and do not accept incoming metrics, but may be used to read metric data from other nodes and perform intensive computation that would add unreasonable load to the main nodes.

These options imply foreground operation and perform a specific task, then exit. They are only valid in identified mode (-i).

-m

Merge text reconstitution files. DEPRECATED

-H

Merge histogram reconstitution files. DEPRECATED

The above 2 options were used in a previous version of the reconstitute process and are no longer strictly required. They may be removed in a future version.

These options imply foreground operation and perform a specific task, then exit. They are only valid in identified mode (-i).

-r text/metrics

Repair text inventory.

-r text/changelog

Repair text datastore.

-r hist/metrics

Repair histogram inventory.

-r hist/<rollup>

Repair a histogram rollup. The value is one of the existing histogram rollup periods from the config file, e.g., hist/60 to repair the 1-minute histogram rollups.

-j

Journal-drain mode. Does not start a network listener, so this node will appear "down" to its peers, but will send any pending journal data to them. This is useful if you are planning to retire and replace a cluster node, and want to ensure that it has sent all outgoing journal data without accepting any new input.

These determine optional behavior, and are not required.

-c <file>

Load configuration from <file>. Must be a full path. If not specified, the default path is /opt/circonus/etc/snowth.conf.

-d

Activate additional debug logging. Use with caution; can generate a large volume of logs.

-D

Stay in the foreground, rather than daemonizing. If specified once, run as a single process with no watchdog. If specified twice, run as a parent/child pair, with the parent (watchdog) process in the foreground.

See the for details on foreground operation.

-u <user>

Drop privileges after start and run as this user.

-g <group>

Drop privileges after start and run as this group.

-t <path>

Chroot to <path> for operation. Ensure that log file locations may be accessed within the chrooted environment.

-l <logname>

Enable <logname>, even if it is disabled in the configuration file. The specified log stream must exist.

-L <logname>

Disable <logname>, even if it is enabled in the configuration file. The specified log stream must exist.

These operations are used when .

-B

Enable reconstitute mode.

-T <topo_hash>

Reconstitute from this remote/foreign topology. Used when creating a new cluster from an existing one.

-O <ip>[:<port>]

Bootstrap remote reconstitute from this node in the source cluster. Used when creating a new cluster from an existing one. The reconstituting node will fetch information about the source cluster's topology from this node, but actual metric data will be fetched from all source cluster nodes.

-A <type>

Reconstitute one type of data, or all if the option is omitted. May be specified multiple times to reconstitute multiple data types.

-S <node_uuid>

Skip the specified node(s) when pulling data for reconstitute. This is useful if a node is unavailable at the time a reconstitute is started. May be specified multiple times to skip more than one node. Use with caution. If the number of skipped nodes exceeds the number of data copies, the reconstitute may be incomplete.

IRONdb has native endpoints for accepting OpenTSDB-style data.

There are 2 methods for ingesting OpenTSDB data into IRONdb:

RESTful HTTP POST of OpenTSDB JSON formatted datapoint(s)

Network socket listener akin to the normal OpenTSDB telnet method

For the HTTP method, POST a JSON object (or an array of JSON objects) to the RESTful API endpoint (see the section below - Writing OpenTSDB with HTTP). Each datapoint should be encoded as follows:

At least one tag key/value pair is required. Multiple datapoints can be sent as a JSON array, separated by commas, and the entire POST enclosed in square brackets.

For example:

In the case of the telnet method, telnet put commands in the normal OpenTSDB format are accepted:

put<space>metric_name<space>timestamp<space>value<space>tag_key=tag_value{<space>tag_key2=tag_value2...<space>tag_keyn=tag_valuen}

At least one tag key/value pair must be included. For example:

put my.metric.name<space>1480371755<space>12345.56<space>datacenter=east

If you desire higher resolution data capture, you can suffix the timestamp with a period, followed by the number of milliseconds in the second, or simply just use 13 numeric digits without the period (the last three digits will become the millseconds). For example:

put my.metric.name<space>1480371964.123<space>12345.56<space>datacenter=east

Or just:

put my.metric.name<space>1480371964123<space>12345.56<space>datacenter=east

These two examples mean 123 milliseconds into the timestamp 1480371964 or November 28, 2016 10:26:04 and 123ms PM UTC

Note that, while it resembles a floating point number, this is not a float.

For data safety reasons, we recommend that you use the RESTful POST interface to send OpenTSDB-formatted JSON data. The network socket listener provides no feedback to the sender about whether or not data was actually ingested (or indeed even made it off the sender machine and was not stuck in an outbound socket buffer) because there is no acknowledgement mechanism on a raw socket.

The HTTP interface, on the other hand, will provide feedback about whether data was safely ingested and will not respond until data has actually been written by the underlying database.

Both of the interfaces require you to namespace your OpenTSDB data. This lets you associate a UUID/Name and numeric identifier with the incoming metrics. This is useful, for example, if you want to use a single IRONdb installation to service multiple different internal groups in your organization but keep metrics hidden across the various groups.

All metrics live under a numeric identifier (you can think of this like an account_id). Metric names can only be associated with an "account_id". This allows you have separate client instances that segregate queries for metric names, or combine them all together under a single "account_id", or even separate your internal groups but recombine them under the client for visualization purposes. It's really up to you.

Furthermore, IRONdb requires associating incoming OpenTSDB data with a UUID and Name to make OpenTSDB data match data ingested from native sources more closely on the Apica platform. We hide the complexity of this on the rendering side, so you only have to worry about this mapping on the ingestion side. This UUID can be created using uuidgen on a typical UNIX(like) system or via any external tool or website that generates UUIDs.

When we store these metric names inside IRONdb, we prefix them with our standard collection category ("reconnoiter" will be automatically assigned) and the "Name" of the check. You can see this in the examples below in more detail.

Adding these additional fields allow us to disambiguate metric names from potential duplicate names collected from other sources.

OpenTSDB ingestion will, by default, accept timestamps up to 1 year in the past. This value may be changed through .

OpenTSDB data is sent by POSTing a JSON object or an array of JSON objects using the format described above to the OpenTSDB ingestion endpoint:

http://<irondb_machine:port>/opentsdb/<account_id>/<uuid>/<check_name>

For example:

http://192.168.1.100:4242/opentsdb/1/8c01e252-e0ed-40bd-d4a3-dc9c7ed3a9b2/dev

This will place all metrics under account_id 1 with that UUID and call them dev.

http://192.168.1.100:4242/opentsdb/1/45e77556-7a1b-46ef-f90a-cfa34e911bc3/prod

This will place all metrics under account_id 1 with that UUID and call them prod.

The network listener requires that we associate an account_id, uuid, and name with a network port. This is added to the during initial installation, for the default OpenTSDB text protocol port (4242). Additional stanzas may be added, associating different IDs with different ports to segregate incoming traffic.

You can then use:

to send metrics to IRONdb, and it will store the datapoint under the supplied metric name with the account, uuid, and name that was provided by the configuration for the port that was used.

The /fetch API provides fast, one-request access to common complex data extraction requirements. It allows for fetch submissions in both FlatBuffers and JSON formats, and returns DF4 output format available in both FlatBuffers and JSON encoding.

API description: See "Retrieving and Transforming Data" under Developer API

Numeric (kind = numeric)

average - the average of measurements in the period.

sum - the sum of measurements in the period.

count - the number of measurements in the period.

Histogram (kind = histogram)

none - pass the input through unmodified.

count - the number of samples in each histogram.

rate - the number of samples per second in each histogram (count/period).

Text (kind = text)

none - pass the input through unmodified.

count - return a numeric count of the number of text entries in the period.

count_cumulative - return a cumulative count of text entries starting at zero for the first period requested.

pass - pass the inputs to outputs unmodified

method_params none

Inputs can be numeric, histogram, or text.

groupby_mean - group inputs and calculate a mean over the grouping

method_params a list of tag categories on which to perform grouping

Inputs must be numeric.

groupby_sum - group inputs and calculate a sum over the grouping

method_params a list of tag categories on which to perform grouping

Inputs must be numeric.

groupby_merge - group inputs and merge into a histogram stream

method_params a list of tag categories on which to perform grouping

Inputs must be either numeric or histogram.

mean - calculate the mean across input streams

method_params none

Inputs must be numeric.

merge - group inputs and merge into a histogram stream

method_params none

Inputs must be either numeric or histogram.

sum - calculate the sum across input streams

method_params none

Inputs must be numeric.

topk - filter a set of inputs to the top K

method_params : [ K, <mech>, <mech_param> ]

Inputs must be either numeric or histogram.

Allowable mech values are mean (default), max, or quantile

Fetches Graphite-style data. The data returned is always average data and this endpoint will scale the rollup_span to match the time range of data requested.

See .

rm -f /irondb/logs/snowth.lockBASE_DATASET=$(zfs list -H -o name /irondb)zfs destroy -r $BASE_DATASET/text

zfs destroy -r $BASE_DATASET/hist_ingest

zfs destroy -r $BASE_DATASET/hist_rollup

zfs destroy -r $BASE_DATASET/localstate

zfs destroy -r $BASE_DATASET/raw_db

zfs destroy -r $BASE_DATASET/surrogate_db

zfs destroy -r $BASE_DATASET/metadata

zfs destroy -r $BASE_DATASET/metric_name_db

zfs destroy -r $BASE_DATASET/nntbs/opt/circonus/bin/irondb-start -B<node ip address>:<node port>/#reconstituteview<node ip address>:<node port>/stats.json/opt/circonus/bin/irondb-start -BReconstitute Finished!{

"db": {

"raw": {

"put`latency": {

"_type": "h",

"_value": [ (histogram values) ]

}

}

}

}db`raw`put`latency{

"latency|ST[app:snowth,db-impl:nom,db-type:raw,operation:put,snowth-node-id:(node-uuid),units:seconds]": {

"_type": "h",

"_value": [ (histogram values) ]

}

}<generic image="monitor" name="monitor">

<config>

<uuid>00000000-0000-0000-0000-000000000000</uuid>

<account_id>1</account_id>

<period>60s</period>

</config>

</generic>curl 'http://127.0.0.1:8112/find/<account_id>/tags?query=and(__check_uuid:<check_uuid>)'curl 'localhost:8112/find/1/tags?query=and(__check_uuid:d8c204ed-c2b6-4704-b6ec-f87787aad21f,db-type:raw,operation:put,__name:latency)'[

{

"uuid": "d8c204ed-c2b6-4704-b6ec-f87787aad21f",

"check_name": "irondb-monitor",

"metric_name": "latency|ST[app:snowth,db-impl:nom,db-type:raw,operation:put,snowth-node-id:12c07a06-2662-4ceb-86a8-ccd05eef0f48,units:seconds]",

"category": "reconnoiter",

"type": "histogram",

"account_id": 1

}

]curl 'localhost:8112/histogram/1557934740/1557934799/60/d8c204ed-c2b6-4704-b6ec-f87787aad21f/latency%7CST%5Bapp%3Asnowth%2Cdb-impl%3Anom%2Cdb-type%3Araw%2Coperation%3Aput%2Csnowth-node-id%3A12c07a06-2662-4ceb-86a8-ccd05eef0f48%2Cunits%3Aseconds%5D'[

[

1557934740,

60,

{

"+75e-005": 1,

"+79e-005": 2,

"+82e-005": 2,

"+83e-005": 1,

"+84e-005": 1,

"+86e-005": 2,

"+88e-005": 1,

"+89e-005": 3,

"+90e-005": 1,

"+92e-005": 2,

"+93e-005": 2,

"+95e-005": 1,

"+10e-004": 11,

"+11e-004": 7,

"+12e-004": 7,

"+13e-004": 10,

"+14e-004": 8,

"+15e-004": 5,

"+16e-004": 12,

"+17e-004": 15,

"+18e-004": 5,

"+19e-004": 5

}

]

]M TIMESTAMP UUID NAME TYPE VALUEM 1512691226.137 example.com`http`c_123_987654::http`1b988fd7-d1e1-48ec-848e-55709511d43f duration I 1H1 TIMESTAMP UUID NAME HISTOGRAMH1 1512691200.000 example.com`ping_icmp`c_123_45678::ping_icmp`c50361d8-7565-4f04-8128-3cd2613dbc82 maximum AAFQ/gABfind:histogram('foobar', 'and(hello:world)') | label('%cn')# One time setup

sudo yum install node bzip2

sudo npm install -g typescript tslint

yarn

# Build

yarn build

# Test

yarn test<rebalance concurrency="1"/>counter - the positive rate of change of the measurements in the period.counter_stddev - the standard deviation of the positive rate of change of the measurements in the period.

derivative - the rate of change of the measurements in the period.

derivative_stddev - the standard deviation of the rate of change of the measurements in the period.

stddev - the standard deviation of measurements in the period.

count_above - calculate the number of samples that are greater than the supplied parameter.transform_params the threshold value for measurements.

count_below - calculate the number of samples that are less than the supplied parameter.

transform_params the threshold value for measurements.

inverse_percentile - calculate what percentage of the population is smaller than the supplied parameter (output in [0,100] or NaN)

transform_params the threshold value for measurements.

inverse_quantile - calculate what ratio of the popultion is smaller than the supplied parameter (output in [0,1] or NaN)

transform_params the threshold value for measurements.

percentile - produce a numeric quantile after dividing the parameter by 100.

transform_params a value in the range [0,100]

quantile - produce a numeric quantile

transform_params a value in the range [0,1]

sum - approximate sum of the samples in each histogram

mean - approximate mean value of the samples in each histogram

count_distinct - return a numeric count of the number of unique text entries in the period.

count_distinct_cumulative - return the total distinct values seen from the beginning of the first period requested through the end of the current period.

count_transition - return a numeric count of the number of times a text entries changes during the period. The first period's first value does not count as a transition.

count_transition_cumulative - return the cumulative transitions seen from the beginning of the first period requested through the end of the current period.

rate - return the text entries per second seen in each period.

rate_distinct - return the unique text entries per second in each period.

rate_distinct_cumulative - return the newly unique text entries per second in each period.

rate_transition - return the number of text entry changes per second in each period.

quantilemechmech_paramCIRCONUS_NAME is what determines both the account and check to which this metric belongs. It has the form c_ACCOUNT-ID_CHECK-BUNDLE-ID::MODULE. ACCOUNT-ID is the most significant, as this is how metric data is partitioned within IRONdb.lower-cased-uuid is the check UUID, lower-cased.

l: int64

L: uint64

n: double

s: string

The use of --pure-python is provided for convenience; However, the native C module is recommended for best performance.

In your graphite's local_settings.py:

Where irondb-host is the DNS or IP of an IRONdb node, port (usually 8112) is the listening port for IRONdb, and <account> is some integer you have been ingesting your metrics under (see Namespacing in the IRONdb docs).

If the IRONdb cluster is using TLS, be sure to use https:// with IRONDB_URLS above, and use port 8443.

optional_query_prefix can be used to prefix all operations with a fixed name. You can use this optional prefix to simplify metric names stored in IRONdb. If you just want raw names as stored in IRONdb, you can omit this last URL section (see Graphite Rendering in the IRONdb documentation).

If you have a multi-node IRONdb installation (likely), you should specify multiple URLS (one for each node in the cluster), or place the IRONdb installation behind a load balancer. For example,

NOTE: the IRONDB_URLS is a python list and therefore must end with a trailing comma on the last entry.

If you are pointing graphite at a Circonus SaaS account, set the token to a

valid Circonus Auth Token and set the URL to the public API URL

(https://api.circonus.com/irondb/graphite). Your tokens can be managed under

your account at https://login.circonus.com/user/tokens. Note that the storage

finder will not work if the application 'graphite' is not approved. If you find

it not working, visit your tokens page and refresh to find the graphite

application and manually approve it.

IRONDB_BATCH_SIZE is optional and will default to 250. Batch size is used to perform multi-fetch from the IRONdb backend if you use graphs with wildcard expansions in the datapoints.

IRONDB_USE_DATABASE_ROLLUPS is an optional Python boolean (True|False) and will default to True. IRONdb can automatically choose the "step" of the returned data if this param is set to True. Calculation for "step" is based on the time span of the query. If you set this to False, IRONdb will return the minimum rollup span it is configured to return for all data. This can result in slower renders as much more data will be returned than may be necessary for rendering. However, some graphite functions (like summarize) require finer resolution data in order to group data properly.

IRONDB_USE_ACTIVITY_TRACKING is an optional Python boolean (True|False) and will default to True. IRONdb supports tracking of metric activity without the expense of reading all known time series data to find active ranges.

IRONDB_TIMEOUT_MS is optional and will default to 10000. With IRONdb >= 0.9.8 this will set an absolute timeout after which queries will be cut off.

IRONDB_CONNECTION_TIMEOUT_MS is optional and will default to 3005.

IRONDB_MAX_RETRIES is optional and will default to 2. Only failures to connect are retried (see IRONDB_CONNECTION_TIMEOUT_MS). Timeouts or other failures are not retried to prevent thundering herd problems.

IRONDB_QUERY_LOG is optional and will default to False. Will log out all queries to the IRONdb backend nodes into the info.log if this is set to True.

IRONDB_ZIPKIN_ENABLED is optional and will default to False. Will send Zipkin headers to the IRONdb nodes that are being queried.

IRONDB_ZIPKIN_EVENT_TRACE_LEVEL is optional and will default to 0. If IRONDB_ZIPKIN_ENABLED is set to False, this flag will do nothing. If it is set to True, this will send headers to the IRONdb nodes that will enable additional event tracing. Right now, the only acceptable values are 0 (off), 1 (basic tracing), and 2 (detailed tracing). 2 can potentially cause performance issues - use this level sparingly. Only recommended for when trying to debug something specific.

0.0.1 (2016-11-10): initial version.

0.0.2 (2017-05-25): fix queries where there is no data for one or more of the requested time series

0.0.3 (2017-06-27): Add CIRCONUS_TOKEN support and IRONDB_USE_DATABASE_ROLLUPS

0.0.4 (2017-06-28): Pass more info back to IRONdb on fetches so the database doesn't have to re-lookup metric ownership among the nodes

0.0.5 (2017-09-01): Retry requests to IRONdb against different nodes if we encounter connection issues or timeouts on requests

0.0.6 (2017-09-11): Pass a timeout to IRONdb on all fetch operations. This requires IRONdb >= 0.9.8

0.0.7 (2017-09-13): Use a separate connection timeout on all fetch operations.

0.0.8 (2017-09-13): Introduce IRONDB_MAX_RETRIES

0.0.9 (2017-11-13): API fix for large fetches, reduce errors by catching more connection failure conditions, thanks @cbowman0

0.0.10 (2017-11-21): Fix sending of X-Snowth-Timeout header

0.0.11 (2018-04-09): Allow handling Flatbuffer data coming from IRONdb

0.0.12 (2018-04-16): Performance improvements to Flatbuffer via native C modules instead of native Python. Requires flatcc

0.0.13 (2018-04-17): Fix memory leaks in native C Flatbuffer module

0.0.14 (2018-07-31): Graphite 1.1 compatibility including tag support

0.0.15 (2018-09-14): IRONDB_QUERY_LOG support

0.0.16 (2018-12-06): Improve error handling. Fix tag categories

0.0.17 (2019-01-23): Fix flatcc native Flatbuffer module

0.0.18 (2019-02-20): Improve FlatBuffers support. Fix metric prefix handling. Use Graphite error log

0.0.19 (2019-03-05): Improve FlatBuffer error handling. Add Zipkin header support

0.0.20 (2019-05-03): Don't issue IRONdb series requests for empty find results, Add IRONDB_ROLLUP_WINDOW setting, Respect IRONDB_BATCH_SIZE setting, fix fetcher keyerror, use first start time when all series arrive late

0.0.21 (2019-05-14): Fix memory leak introduced in 0.0.20

This content was sourced from the README on GitHub.

zpool get freeingzfs create $BASE_DATASET/hist_ingest

zfs create $BASE_DATASET/hist_rollup

zfs create $BASE_DATASET/text

zfs create $BASE_DATASET/localstate

zfs create $BASE_DATASET/metadata

zfs create -o logbias=throughput $BASE_DATASET/raw_db

zfs create -o logbias=throughput $BASE_DATASET/surrogate_db

zfs create $BASE_DATASET/metric_name_db

zfs create $BASE_DATASET/nntbsmkdir /irondb/hist_ingest/<node_id>

mkdir /ironeb/hist_rollup/<node_id>

mkdir /irondb/text/<node_id>

mkdir /irondb/raw_db/<node_id>

mkdir /irondb/surrogate_db/<node_id>

mkdir /irondb/metadata/<node_id>

mkdir /irondb/metric_name_db/<node_id>

mkdir /irondb/nntbs/<node_id>chown -R nobody:nobody /irondb/TARGET`MODULE`CIRCONUS_NAME`lower-cased-uuidprocess control flags:

-k start start the process (default)

-k stop stop a running process

-k status report the status via exit code

mutually exclusive flags:

-e boot this node ephemerally (compute node)

-i <uuid> identify this node

standalone loader flags for use with -i

-m merge text reconstitution files (deprecated)

-H merge hist reconstitution files (deprecated)

standalone maintenance flags for use with -i

-r text/metrics repair text inventory

-r text/changelog repair text datastore

-r hist/metrics repair hist inventory

-r hist/<period> repair hist rollup for configured <period>

-j only write journal data to other nodes

optional behavior flags:

-c <file> load config from <file> (full path)

default: /opt/circonus/etc/snowth.conf

-d debugging

-D foreground operations (don't daemonize)

-u <user> run as <user>

-g <group> run as <group>

-t <path> chroot to <path>

-l <logname> enable <logname>

-L <logname> disable <logname>

-q disable gossip on this node

reconstitute parameters:

-B Reconstitute mode

-T <topo_hash> Reconstitute new cluster from remote topology

-O <ip>[:<port>] Reconstitute from remote host

-A <type> Reconstitute type

Acceptable values: nntbs,text,hist,raw,surrogate

May be specified multiple times

All if omitted

-S <node_uuid> Skip/ignore this node during reconstitute

May be specified multiple times

this usage message:

-h usage{

"metric": "metric_name",

"timestamp": timestamp,

"value": value,

"tags": {

"tag_key": "tag_value",

"tag_key2": "tag_value2",

...

"tag_keyn": "tag_valuen"

}

}[{

"metric": "my.metric.name",

"timestamp": 1544678300,

"value": 637,

"tags": {

"datacenter": "east"

}

},

{

"metric": "myother.metric.name",

"timestamp": 1544688100,

"value": 3475,

"tags": {

"datacenter": "west"

}

}] <listener address="*" port="4243" type="opentsdb">

<config>

<check_uuid>549a90ee-c5bb-4b0f-bcb4-e942b0503f85</check_uuid>

<check_name>myothercheckname</check_name>

<account_id>1</account_id>

</config>

</listener>echo "my.metric.name.one `date +%s` 1 cpu=1" | nc 4243$ git clone http://github.com/circonus-labs/graphite-irondb

$ cd graphite-irondb$ sudo python setup.py install --with-flatcc=PREFIX$ sudo python setup.py install --pure-python STORAGE_FINDERS = (

'irondb.IRONdbFinder',

)

TAGDB = 'irondb.IRONdbTagFetcher'

IRONDB_URLS = (

'http://<irondb-host>:<port>/graphite/<account>/<optional_query_prefix>',

)

# Optional. You need CIRCONUS_TOKEN if you are using this with Circonus SaaS.

# If you are not using Circonus SaaS you can omit this setting

CIRCONUS_TOKEN = '0005cc1f-5b27-4b60-937b-7c73a25dfef7'

IRONDB_BATCH_SIZE = 250

IRONDB_USE_DATABASE_ROLLUPS = True

IRONDB_USE_ACTIVITY_TRACKING = True

IRONDB_TIMEOUT_MS = 10000

IRONDB_CONNECTION_TIMEOUT_MS = 3005

IRONDB_MAX_RETRIES = 2

IRONDB_QUERY_LOG = False

IRONDB_URLS = (

'http://host1:8112/graphite/1',

'http://host2:8112/graphite/1',

)CIRCONUS_TOKEN = '<your-token-uuid>'

IRONDB_URLS = (

'https://api.circonus.com/irondb/graphite',

)API description: See "Internal Observability" in the Administration API

This API call is for viewing the system state of the current node.

Data will be returned as a JSON document. The fields in this document are described below.

identity : The UUID that identifies this node.

current : The current topology in which this node resides.

next : The next topology for this node. A value of "-" indicates there is no next topology.

This API call retrieves gossip information from a IRONdb node. Gossip data is information on how the nodes are communicating with each other and if any nodes are behind other nodes with regards to data replication.

Data will be returned as an array of JSON objects. The format of these objects is described below.

API description: See "Internal Observability" in the

Each object in the array has the following form:

id : The UUID of the node whose gossip information follows.

gossip_time : The last time, in seconds, that this node received a gossip message.

gossip_age : The difference, in seconds, between the last time this node received a gossip message and the current time.

This API call retrieves gossip information from a Snowth node. Gossip data is information on how the nodes are communicating with each other and if any nodes are behind other nodes with regards to data replication.

Data will be returned an XML object. The format of this object is described below.

API description: See "Internal Observability" in the

<nodes> : The top-level element for the topology.

<node> : The container for all the information for a single node in the cluster. There will x of these elements, where "x" is the number of nodes in the cluster.

Attributes:

IRONdb is a drop-in replacement for Graphite's Whisper database.

It supports ingestion from Carbon sources like carbon-relay and carbon-c-relay. Graphite-irondb is a storage finder plugin that allows IRONdb to seamlessly integrate with an organization's existing Graphite-web deployment.

The IRONdb Relay is a scalable, drop-in replacement for carbon-relay or carbon-c-relay.

The format for ingestion is the typical Carbon plaintext format:

dot.separated.metric.name<space>12345.56<space>1480371755

If you desire higher resolution data capture, IRONdb does support a variant of the unix epoch timestamp (3rd field) where you can suffix the timestamp with a period, followed by the number of milliseconds in the second. For example:

dot.separated.metric.name<space>12345.56<space>1480371964.123

This example means 123 milliseconds into the timestamp 1480371964 or November 28, 2016 10:26:04 and 123ms PM UTC

Note that, while it resembles a floating point number, this is not a float.

Starting with IRONdb release 0.12 you can also ingest tagged graphite data. Tagged graphite data has the following format:

dot.separated.metric.name;category1=value1;category2=value2

Where tags are appended to the normal name and are separated by semicolons (;).

For more info on the graphite tag format see: .

Graphite ingestion into IRONdb requires namespacing your graphite data. This lets you associate a UUID/Name and numeric identifier with the incoming metrics. This is useful, for example, if you want to use a single IRONdb installation to service multiple different internal groups in your organization but keep metrics hidden across the various groups.